Contextual Bandits: The Next Step in Personalization

See how contextual bandits enable smarter personalization. Get a look at real-world examples and benefits, and learn how implementing contextual multi-armed bandits (CMABs) leads to higher conversion rates.

When it comes to personalization, competition is fierce. We live in times when customers are hard to satisfy. We live in times of goldfish-length attention spans.

Everyone knows personalization matters; that's not news. But how do you deliver truly relevant experiences that drive conversions without wasting resources? That's where contextual bandits come in.

Why smarter personalization matters

Creating truly personalized experiences at scale is a particular challenge. When you have multiple products, audience segments, and user attributes to account for, traditional approaches require significant manual effort.

Rule-based personalization requires a long setup time to configure the right conditions and targeting for each segment. Figuring out which experiences resonate with which user characteristics often takes a lot of guesswork and testing.

Contextual bandits take this work off your plate. Once you select the relevant user attributes, a contextual bandit automatically learns which experiences work best for which audiences. It delivers valuable insights and maximizes conversions for as long as it runs.

What is a contextual bandit?

The term "multi-armed bandit" comes from the classic slot-machine analogy (the "one-armed bandit").

Imagine a casino with several slot machines. Which one should you play to maximize your winnings? That is the fundamental challenge.

Contextual bandits go a step further by taking into account who is pulling the lever. They use user data to make better algorithmic decisions and enable 1:1 personalization. The machine learning model weighs the impact on your primary metric against the data it has about each visitor (the context).

A contextual multi-armed bandit serves each visitor the best variation for their unique profile at that particular moment. The chosen variation differs across visitor profiles, because the goal is to achieve maximum impact for every visitor in every session.

Instead of painstakingly mapping each variation to different user archetypes (i.e., manually configuring static, rule-based targeting), you can rely on the contextual bandit to make these decisions for you, and more accurately.

From multi-armed bandits to contextual bandits

What sets contextual bandits apart from multi-armed bandits? Context.

Traditional multi-armed bandits (MABs) look for the single variation that performs best across all users, while contextual bandits identify winning variations based on user profiles such as device type, location, behavior, purchase history, and more.

Let's compare:

- A/B testing: A fixed traffic split in which visitors are randomly assigned to variations. Each person sees only one experience, and you wait for statistical significance.

- Multi-armed bandits: Optimize toward the single best-performing variation. Traffic is allocated dynamically, but the goal is still to find one "winner".

- Contextual bandits: Personalize for individual users based on context. Different users get different experiences, depending on what is most likely to drive a conversion for their profile.
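To make the difference concrete, here is a toy sketch with invented conversion rates (hypothetical numbers, not Optimizely data or results). It computes what each approach would deliver if the true rates were already known; a real bandit has to learn them from traffic.

```python
"""Toy comparison: even A/B split vs. one global winner (MAB) vs. a winner per context (CMAB).

All numbers are invented for illustration; they are not real results.
"""

# Hypothetical true conversion rates per (device, variation).
CONVERSION = {
    "mobile":  {"A": 0.050, "B": 0.030, "C": 0.020},
    "desktop": {"A": 0.020, "B": 0.045, "C": 0.030},
}
# Hypothetical share of traffic per device.
TRAFFIC_SHARE = {"mobile": 0.5, "desktop": 0.5}


def uniform_rate(conversion, share):
    """A/B(/n) test: a fixed, even traffic split across all variations."""
    return sum(
        share[ctx] * sum(rates.values()) / len(rates)
        for ctx, rates in conversion.items()
    )


def global_winner(conversion, share):
    """What a non-contextual MAB converges to: the best variation on average."""
    variations = next(iter(conversion.values())).keys()
    overall = {
        v: sum(share[ctx] * conversion[ctx][v] for ctx in conversion)
        for v in variations
    }
    return max(overall, key=overall.get), overall


def per_context_winners(conversion):
    """What a contextual bandit aims for: the best variation for each context."""
    return {ctx: max(rates, key=rates.get) for ctx, rates in conversion.items()}


def expected_rate(policy, conversion, share):
    """Expected conversion rate when each context is served policy[ctx]."""
    return sum(share[ctx] * conversion[ctx][policy[ctx]] for ctx in conversion)


if __name__ == "__main__":
    winner, _ = global_winner(CONVERSION, TRAFFIC_SHARE)
    mab_policy = {ctx: winner for ctx in CONVERSION}
    cmab_policy = per_context_winners(CONVERSION)
    print(f"A/B even split: {uniform_rate(CONVERSION, TRAFFIC_SHARE):.4f}")
    print(f"MAB, everyone sees {winner}: "
          f"{expected_rate(mab_policy, CONVERSION, TRAFFIC_SHARE):.4f}")
    print(f"CMAB {cmab_policy}: "
          f"{expected_rate(cmab_policy, CONVERSION, TRAFFIC_SHARE):.4f}")
```

With these made-up numbers, an even split converts at about 3.25%, the MAB settles on B for everyone (about 3.75%), and serving A on mobile and B on desktop reaches about 4.75%. No single variation can match the per-context policy here, because the best variation differs by device.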

Every missed optimal experience is a missed conversion opportunity. A/B testing generalizes the winner from one segment to every visitor. MABs improve on this, but they still look for one "best" variation for everyone.

Contextual bandits serve each visitor the variation that is best for them at that moment. As profiles change, so does the relevant variation. For example, if a visitor converts on one product, on their next visit they may see a related product instead of the same one, which increases the chance of another conversion.

How contextual bandits work

Contextual bandits weigh the impact on your primary metric against user attributes to dynamically serve the most relevant variation to each visitor at that particular moment.

Here is a simplified explanation:

- Learning phase: The model starts with 100% exploration, randomly assigning variations to visitors to collect diverse data for its predictions.

- Balancing exploration and exploitation: Once enough visitor behavior data has been collected, the model starts exploiting (serving personalized variations). It dynamically adjusts the exploration/exploitation ratio as it receives more events.

- Continuous adaptation: The model always keeps some exploration (at most 95% exploitation) to keep learning and avoid missing opportunities.
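The three phases can be sketched as an epsilon-greedy policy over per-context conversion averages. This is a minimal illustration, not Optimizely's model (which, as described further below, uses tree-based learners). The warm-up length `WARMUP_EVENTS`, the linear decay over `DECAY_EVENTS`, and the `EpsilonGreedyBandit` class are hypothetical choices; only the 5% exploration floor (at most 95% exploitation) comes from the description above.

```python
import random
from collections import defaultdict

WARMUP_EVENTS = 1_000    # hypothetical: events logged before any exploitation
DECAY_EVENTS = 10_000    # hypothetical: events over which exploration ramps down
MIN_EXPLORATION = 0.05   # floor: at most 95% exploitation, as described above


def exploration_rate(events_seen: int) -> float:
    """Share of traffic served a random variation, given the events logged so far."""
    if events_seen < WARMUP_EVENTS:
        return 1.0  # learning phase: 100% exploration
    # Ramp exploration down linearly (an assumption), but never below the floor.
    progress = (events_seen - WARMUP_EVENTS) / DECAY_EVENTS
    return max(MIN_EXPLORATION, 1.0 - progress)


class EpsilonGreedyBandit:
    """Toy contextual bandit: conversion averages per (context, variation)."""

    def __init__(self, variations, rng=None):
        self.variations = list(variations)
        self.rng = rng or random.Random()
        self.events = 0
        # (context, variation) -> [conversions, impressions]
        self.stats = defaultdict(lambda: [0, 0])

    def estimate(self, context, variation) -> float:
        conversions, impressions = self.stats.get((context, variation), (0, 0))
        return conversions / impressions if impressions else 0.0

    def choose(self, context):
        if self.rng.random() < exploration_rate(self.events):
            return self.rng.choice(self.variations)  # explore
        # Exploit: best observed conversion rate for this context.
        return max(self.variations, key=lambda v: self.estimate(context, v))

    def update(self, context, variation, converted: bool) -> None:
        """Incremental learning: update counters instead of retraining from scratch."""
        cell = self.stats[(context, variation)]
        cell[0] += int(converted)
        cell[1] += 1
        self.events += 1


if __name__ == "__main__":
    # Hypothetical ground truth: A wins on mobile, B wins on desktop.
    true_rate = {("mobile", "A"): 0.30, ("mobile", "B"): 0.10,
                 ("desktop", "A"): 0.10, ("desktop", "B"): 0.30}
    env = random.Random(0)
    bandit = EpsilonGreedyBandit(["A", "B"], rng=random.Random(1))
    for _ in range(20_000):
        ctx = env.choice(["mobile", "desktop"])
        variation = bandit.choose(ctx)
        bandit.update(ctx, variation, env.random() < true_rate[(ctx, variation)])
    for ctx in ("mobile", "desktop"):
        print(ctx, {v: round(bandit.estimate(ctx, v), 3) for v in bandit.variations})
```

A linear ramp is only one option. The model is described as adjusting the ratio dynamically as events arrive, without specifying the rule, so treat the schedule here as an assumption.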

Choosing the right primary metric is critical, because the impact on it drives how the model allocates traffic. We therefore recommend tracking it as close as possible to where the contextual bandit runs, ideally on the same page.

User attributes are just as important. The more complete your attributes are (purchased products, viewed products, browsed categories, and so on), the better your model will perform. Optimizely's model supports an unlimited number of attributes out of the box, from standard (client-side), custom (API), and external (third-party) sources.

Use cases for contextual bandits

Here are examples of broader industry applications:

- Retail: Homepage product carousels personalized by purchase frequency and purchase history.

- Media: Homepage content suggestions (sports, series, movies) based on viewing habits and device.

- Software: Dashboard highlights tailored to the user's role and usage behavior.

But are there real-world examples yet, you ask?

Our beta participants are already implementing contextual bandits and seeing results:

- A financial services provider uses contextual bandits on its homepage to surface relevant banking products based on customer history.

- A pizza restaurant chain uses contextual bandits on its checkout page to suggest add-ons based on the contents of the cart.

- A telecommunications company uses contextual bandits on its profile page to present upsell offers based on current subscriptions.

Optimizely's digital team also uses contextual bandits. The team runs CMABs on our homepage to match visitors with products based on their company, role, industry, and location.

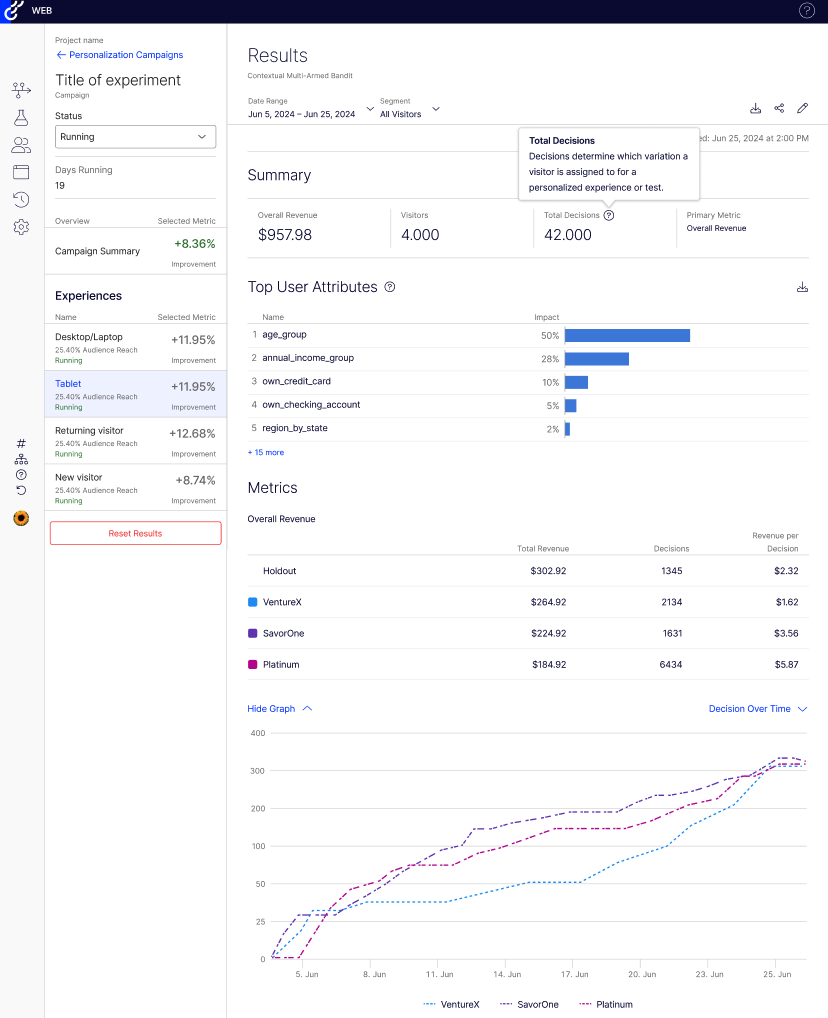

Here are some early results:

- 13.62% higher engagement with targeting content

- 3.37% improvement in marketing planning

- 20.79% improvement in validation through testing

The team says it is performing well across the board.

Disclaimer: This is an early preview of the Optimizely Contextual Bandits results page and how it will look in the future.

Image source: Optimizely

Benefits of implementing contextual bandits

CMABs deliver significant business value by:

- Delivering truly personalized experiences for every user: Instead of one-size-fits-all solutions, CMABs deliver the right content to the right person at the right time.

- Driving higher conversion rates on the primary metric: By showing users what they are most likely to respond to, CMABs drive more engagement and conversions.

- Adapting dynamically to changes in visitor behavior: The system serves the best variation in every session, even as user preferences change.

- Reducing the opportunity cost of traditional testing: Unlike A/B tests, which can take weeks or months to reach statistical significance, contextual bandits start optimizing right away, so fewer visitors are exposed to underperforming variations.

- Requiring minimal maintenance: CMABs are ideal for pages whose content doesn't change too often. The ML model gets sharper as it collects more data, so you can leave this optimization running continuously.

CMABs increase the likelihood of conversion, have a positive effect on ROI, and reduce the opportunity costs incurred with A/B testing or traditional bandits.

Optimizely's contextual bandit implementation: What makes it different

Here is how we approach things differently:

- Advanced tree-based models: We've built models for both binary classification and regression tasks, which makes our system flexible and adaptable to different data types and experiment setups.

- Feature importance insights: Our system measures the impact of each attribute and reports feature importance, so you can see which attributes drive conversions.

- Dual model and incremental learning: We handle all prediction types with specialized models that keep learning from new data without starting from scratch.

- Dynamic feature processing: Our preprocessing automatically converts features and handles data issues. With XGBoost, we build many simple trees, each learning from the errors of the previous ones, instead of one complex tree, and we prevent overfitting through regularization and other techniques.

- Integration with the broader ecosystem: Our CMAB implementation works seamlessly with the Optimizely suite for experimentation and personalization, so you can improve your strategy without additional tools or complexity.
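To illustrate what "automatically converting features and handling data issues" can involve before visitor attributes reach a tree model, here is a hypothetical preprocessing sketch. It is not Optimizely's production pipeline: the function `to_features`, the attribute names, and the default values are all made up. It merges attributes from the three source types, one-hot encodes categorical values (with a catch-all column for unseen values), and falls back to defaults for missing or malformed numbers.

```python
from typing import Any, Dict, List


def to_features(
    attributes: Dict[str, Any],
    categorical: Dict[str, List[str]],
    numeric_defaults: Dict[str, float],
) -> Dict[str, float]:
    """Turn raw visitor attributes into a flat numeric feature dict (hypothetical).

    categorical: attribute name -> known values; each gets a one-hot column, and
                 unseen values go to an "__other" column.
    numeric_defaults: attribute name -> value used when the attribute is missing
                      or cannot be parsed as a number.
    """
    features: Dict[str, float] = {}
    for name, known in categorical.items():
        value = attributes.get(name)
        for option in known:
            features[f"{name}={option}"] = 1.0 if value == option else 0.0
        unseen = value is not None and value not in known
        features[f"{name}=__other"] = 1.0 if unseen else 0.0
    for name, default in numeric_defaults.items():
        try:
            features[name] = float(attributes.get(name))
        except (TypeError, ValueError):
            features[name] = default  # missing (None) or malformed value
    return features


if __name__ == "__main__":
    standard = {"device": "mobile"}          # e.g. collected client-side
    custom = {"orders_last_90d": "3"}        # e.g. sent via API, arrives as a string
    external = {"industry": "retail"}        # e.g. third-party enrichment
    attributes = {**standard, **custom, **external}
    print(to_features(
        attributes,
        categorical={"device": ["mobile", "desktop"], "industry": ["retail", "finance"]},
        numeric_defaults={"orders_last_90d": 0.0, "sessions": 1.0},
    ))
```

One-hot encoding with a catch-all column is just one common choice; the resulting numeric rows could then be fed to a gradient-boosted tree model such as XGBoost.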

The future of personalization is contextual

In a world where one size no longer fits all, context is key.

As competition for attention intensifies, static approaches to personalization simply no longer work. The winners will be the brands that deliver truly relevant experiences and continuously adapt to their customers' changing behaviors and preferences.

Ready to learn how contextual bandits can help you achieve better engagement, higher conversion rates, and greater customer satisfaction?

Take this 2-minute Navattic tour to see what contextual bandits look like on the platform.