The only guide you need to choose a statistical model for your experimentation program

A statistical model can influence the interpretation, speed, and robustness of your experimentation results. The best approach will depend on the specifics of the experiment, the information available, and the desired balance between speed and certainty.

Most testing advice gets it all wrong...

You’ve probably heard it...

“Sequential testing is always better.”

“Fixed-horizon is the gold standard.”

“Bayesian is the future.”

I've seen companies follow this advice and still make bad decisions.

The truth? There’s no one-size-fits-all method. Your statistical approach should match your business reality, not a textbook ideal. It isn't about mathematical elegance; it's about aligning with your specific business constraints, timeline needs, and decision-making processes.

Today, I'm going to show you exactly how to select the right statistical approach for your specific situation. No universal proclamations, just practical decision frameworks that work in the real world.

What this guide will cover

- Core statistical approaches and when each shines (not which one is "better")

- Real business impacts of your statistical choice (the "so what" that matters)

- Practical decision framework for selecting your approach (no more guessing)

- Common pitfalls and how to avoid them (learn from others' mistakes)

By the end of this guide, you'll be equipped to make the right choice for your program.

The two schools of statistical thought

At the highest level, there are two fundamental statistical philosophies:

1. Frequentist statistics

Interprets probability as the long-run frequency of events. When someone talks about "p-values," "statistical significance," or "95% confidence intervals," they're speaking frequentist.

This is like saying: "If there were truly no effect and we ran this experiment 100 times, we'd see this result or something more extreme fewer than 5 times by pure chance."
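Here is a minimal sketch of a two-sided, two-proportion z-test in Python that shows where such a p-value comes from. The conversion counts are hypothetical. The pooled-variance normal approximation is one common choice, not necessarily what any particular testing tool uses.

```python
import math

def two_proportion_z_test(conv_a, n_a, conv_b, n_b):
    """Two-sided two-proportion z-test with a pooled standard error.

    Returns (z, p_value), where p_value is the chance, assuming no true
    difference, of a z-statistic at least this far from zero.
    """
    p_a, p_b = conv_a / n_a, conv_b / n_b
    pooled = (conv_a + conv_b) / (n_a + n_b)  # common rate under "no effect"
    se = math.sqrt(pooled * (1 - pooled) * (1 / n_a + 1 / n_b))
    z = (p_b - p_a) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p_value

# Hypothetical results: control 500/10,000 vs. variant 570/10,000.
z, p_value = two_proportion_z_test(500, 10_000, 570, 10_000)
print(f"z = {z:.2f}, p-value = {p_value:.3f}")
```

With these counts the p-value falls just under 0.03, so at a 5% significance level the difference would be called significant.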

Error control in frequentist approaches focuses on managing two types of errors:

- Type I error (false positive): Concluding there's an effect when there isn't one. Like saying your new feature improved conversion when it actually didn't.

- Type II error (false negative): Missing a real effect. Like concluding your feature had no impact when it did improve conversion.

In practice, frequentists typically control Type I error (set at 5% in most experiments) while Type II is managed through statistical power (typically 80%).
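As a rough sketch of how the 5% and 80% settings turn into a sample size, the function below applies the standard normal-approximation formula for comparing two conversion rates. The baseline rate and lift are hypothetical, and `sample_size_per_arm` is an illustrative helper, not a library function.

```python
import math
from statistics import NormalDist

def sample_size_per_arm(p_control, p_variant, alpha=0.05, power=0.80):
    """Visitors needed per arm for a two-sided two-proportion z-test."""
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # ~1.96 for alpha = 0.05
    z_beta = NormalDist().inv_cdf(power)            # ~0.84 for 80% power
    p_bar = (p_control + p_variant) / 2
    numerator = (
        z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
        + z_beta * math.sqrt(p_control * (1 - p_control) + p_variant * (1 - p_variant))
    ) ** 2
    return math.ceil(numerator / (p_variant - p_control) ** 2)

# Hypothetical: 5% baseline conversion, aiming to detect a 10% relative lift.
n_per_arm = sample_size_per_arm(0.05, 0.055)
print(f"Visitors needed per arm: {n_per_arm:,}")
```

For these inputs the result is roughly 31,000 visitors per arm. Halving the detectable lift roughly quadruples that, because the required sample size scales with one over the squared difference.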

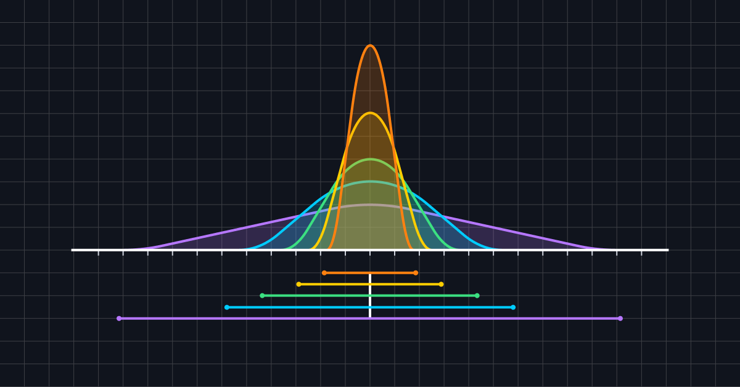

2. Bayesian statistics

Treats probability as a degree of belief. Instead of asking "What's the chance of seeing this data if there's no effect?" (frequentist), it asks "What's the probability there's a real effect, given this data?"

In Bayesian terms, it's like saying: "Based on what we know and what we've observed, there's an 80% chance this variant is better."

Error control in Bayesian approaches is fundamentally different. Rather than controlling error rates across hypothetical repeated experiments, Bayesians express uncertainty directly through probability distributions.

Instead of binary decisions with error rates, Bayesians might say: "There's a 95% probability that the true effect lies between 2% and 5%," providing a more intuitive framework for decision-making under uncertainty.
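Here is a minimal sketch of how such statements can be produced for conversion rates. It assumes a Beta-Binomial model with a flat Beta(1, 1) prior on each arm and uses hypothetical counts. Monte Carlo draws from each posterior give both the probability that B beats A and a 95% credible interval for the difference in conversion rate.

```python
import random

def posterior_summary(conv_a, n_a, conv_b, n_b, draws=50_000, seed=1):
    """Beta-Binomial posteriors with flat Beta(1, 1) priors (an assumption).

    Returns (P(rate_B > rate_A), (lower, upper)) where (lower, upper) is a
    95% credible interval for rate_B - rate_A.
    """
    rng = random.Random(seed)
    diffs = []
    for _ in range(draws):
        p_a = rng.betavariate(1 + conv_a, 1 + n_a - conv_a)  # posterior draw, arm A
        p_b = rng.betavariate(1 + conv_b, 1 + n_b - conv_b)  # posterior draw, arm B
        diffs.append(p_b - p_a)
    diffs.sort()
    prob_b_better = sum(d > 0 for d in diffs) / draws
    lower = diffs[int(0.025 * draws)]      # 2.5th percentile
    upper = diffs[int(0.975 * draws) - 1]  # 97.5th percentile
    return prob_b_better, (lower, upper)

prob_b_better, (lower, upper) = posterior_summary(500, 10_000, 570, 10_000)
print(f"P(B > A) = {prob_b_better:.3f}")
print(f"95% credible interval for the difference: [{lower:.4f}, {upper:.4f}]")
```

With these counts P(B > A) comes out near 0.99, and the interval is centered near a 0.7 percentage point difference. A flat prior is the simplest choice. An informative prior would change both numbers.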

The three main testing approaches

Let's break down the key approaches and when each one shines:

1. Fixed-horizon testing: The traditional workhorse

What it is: The statistics you learned in school. You calculate a sample size upfront, run until you reach it, and never peek at results before the end.

When it shines:

- Scientific/regulated environments

- Predictable timelines and traffic

- Well-understood effect sizes

- Regulatory compliance contexts

Business impact: Like using a sledgehammer to hang a picture. Reliable, but often inefficient for digital experimentation.

The science behind it: Controls false positives (Type I error) at your chosen significance level (typically 5%), but only if you follow the rules perfectly. Peek early or extend the test, and your false positive rate can spike to 20-30%; with unlimited peeking, it approaches 100%.
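The inflation from peeking is easy to see in simulation. This sketch runs A/A tests, where there is no true effect. It assumes a normally distributed metric summarized as standardized per-observation differences. It then compares a single z-test at the end against the same 5% z-test repeated at 20 evenly spaced looks. The exact inflated rate depends on how often you look, and the settings here are illustrative.

```python
import math
import random

Z_CRIT = 1.96  # two-sided critical value for alpha = 0.05

def simulate_aa_test(n_obs, peek_every, rng):
    """One A/A test: returns (significant at the final look, significant at any look)."""
    total = 0.0
    significant_at_any_look = False
    for n in range(1, n_obs + 1):
        total += rng.gauss(0.0, 1.0)  # standardized difference, true mean 0
        if n % peek_every == 0 and abs(total / math.sqrt(n)) > Z_CRIT:
            significant_at_any_look = True
    significant_at_end = abs(total / math.sqrt(n_obs)) > Z_CRIT
    return significant_at_end, significant_at_any_look

def false_positive_rates(n_sims=2_000, n_obs=1_000, peek_every=50, seed=42):
    """Share of A/A tests declared significant with one look vs. repeated looks."""
    rng = random.Random(seed)
    end_hits = any_hits = 0
    for _ in range(n_sims):
        at_end, at_any = simulate_aa_test(n_obs, peek_every, rng)
        end_hits += at_end
        any_hits += at_any
    return end_hits / n_sims, any_hits / n_sims

fixed_fpr, peeking_fpr = false_positive_rates()  # 1,000 / 50 = 20 looks
print(f"False positive rate, one look at the end: {fixed_fpr:.3f}")
print(f"False positive rate, 20 looks:            {peeking_fpr:.3f}")
```

With one look, roughly 5% of A/A tests come out "significant", as designed. Checking 20 times pushes that several times higher.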

Example: While many scientific studies use fixed-horizon designs, even highly regulated industries like pharmaceuticals often incorporate sophisticated interim analyses into their trial designs. However, these interim looks must be pre-planned and account for multiple tests to maintain statistical validity.

2. Frequentist sequential: Making "peeking" valid

What it is: A testing approach that allows for continuous monitoring with statistically valid early stopping. While some sequential methods use frequentist terms (p-values, confidence intervals), they don't fit neatly into the traditional frequentist paradigm.

When it shines:

- Digital experimentation with variable traffic

- When you need flexibility to stop tests early

- When opportunity costs of running unnecessarily long tests are high

Business impact: Allows you to be more agile with your testing program while maintaining statistical rigor.

The science behind it: Methods like mSPRT require stronger evidence before declaring significance, which keeps error control valid no matter when or how often you check results.

Example: An e-commerce company can quickly halt a test when a new checkout flow shows significant conversion drops, minimizing revenue loss.

The trade-off: Tests take longer to conclude when there's truly no effect. That's the price of being able to stop early when effects are real.

3. Bayesian testing: The intuitive alternative

What it is: A different approach that incorporates prior knowledge and directly states the probability that a variant is better.

When it shines:

- Limited sample sizes

- Historical data available

- Explaining results to stakeholders

Business impact: More intuitive results ("80% chance variant B is better") and potentially faster decisions with good prior information.

The science behind it: Combines prior beliefs with observed data to create probability distributions about effect sizes. The quality of your priors dramatically impacts results.

Example: A product team with extensive historical data can incorporate that knowledge into new tests, reaching decisions with smaller sample sizes.

Misconception: Bayesian methods don't eliminate false positives any more than frequentist methods do. They express uncertainty differently and may identify more "winners" because they use different error control mechanisms.

Quick reference: Choosing your approach

Need to make a decision fast? Here's your cheat sheet:

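| Approach | When to use | Advantages | Limitations |
|---|---|---|---|
| Fixed-horizon | Scientific or regulated environments; predictable timelines and traffic | Controls false positives at the chosen significance level if you follow the rules | No valid peeking; often inefficient for digital experimentation |
| Sequential | Digital experimentation with variable traffic; when you need to stop tests early | Valid continuous monitoring and early stopping | Takes longer to conclude when there's truly no effect |
| Bayesian | Limited sample sizes; reliable historical data; explaining results to stakeholders | Intuitive probability statements; can incorporate prior knowledge | Results depend on prior quality; doesn't eliminate false positives |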

Decision framework for your statistical approach

Forget asking "Which method is better?" That's the wrong question entirely.

Instead, ask these questions to find the right approach for your specific situation:

1. What are your business constraints?

- Do you need to detect effects as quickly as possible?

- Is your sample size limited?

- How important is interpretability to non-technical stakeholders?

- How crucial is it to minimize exposure to potential negative experiences?

2. What data advantages do you have?

- Do you have reliable historical data on similar tests?

- How variable are your metrics?

- How many metrics and variations are you testing simultaneously?

- Do you have domain experts who can provide informed priors?

3. What are your error control priorities?

- Is limiting false positives critical to your business?

- Are false negatives (missed opportunities) more costly?

- Do you need probability statements about effects?

- What's the cost of a wrong decision in either direction?

4. What's your organizational context?

- Do you need to follow established scientific protocols?

- How mature is your experimentation program?

- What tools do you have available?

- What's the statistical literacy of your stakeholders?

Common mistakes that you can avoid...

I've seen teams make the same mistakes over and over with each approach. Here's how to avoid them:

1. Fixed-horizon testing

- Peeking at results before reaching your predetermined sample size (this invalidates your entire test)

- Extending tests beyond the planned sample size when results aren't significant ("optional stopping" dramatically increases false positives)

- Using arbitrary sample sizes instead of proper power calculations (leads to underpowered or wasteful tests)

- Ignoring business constraints in favor of statistical purity (the perfect is the enemy of the good)

2. Sequential testing

- Expecting quick results for tests with minimal or no effects (sequential testing takes longer when there's no effect)

- Misunderstanding power implications for tests with small effects (sequential tests have different power characteristics)

- Over-relying on early results without considering business impact (statistical significance ≠ business significance)

- Using the wrong sequential method for your specific needs (not all sequential approaches are created equal)

3. Bayesian testing

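- Choosing priors carelessly. Think of priors as your informed guess before you run the test, like betting with some past experience in your back pocket. If that experience doesn't reflect reality, your conclusions will be skewed.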

- Misinterpreting posterior probabilities in business contexts

- Neglecting sensitivity analysis of your prior choices (how much do your priors influence your conclusions?)
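A basic prior sensitivity check reruns the analysis under several priors and looks at how far the conclusions move. This sketch assumes a Beta-Binomial model for one variant's conversion rate. It uses three hypothetical priors, two of them centered on a 5% historical rate, and compares posterior means at a small and a large sample size with the same observed 8% rate.

```python
# Hypothetical priors for one variant's conversion rate: (alpha, beta).
PRIORS = {
    "flat Beta(1, 1)": (1, 1),
    "weak Beta(5, 95)": (5, 95),        # ~5% rate, worth ~100 prior visitors
    "strong Beta(50, 950)": (50, 950),  # ~5% rate, worth ~1,000 prior visitors
}

def posterior_mean(a, b, conversions, visitors):
    """Mean of the Beta(a + conversions, b + visitors - conversions) posterior."""
    return (a + conversions) / (a + b + visitors)

def sensitivity(conversions, visitors):
    """Posterior mean under each prior for the same observed data."""
    return {name: posterior_mean(a, b, conversions, visitors)
            for name, (a, b) in PRIORS.items()}

def spread(means):
    """Gap between the most and least optimistic prior's conclusion."""
    return max(means.values()) - min(means.values())

for label, (conv, n) in {"200 visitors": (16, 200), "20,000 visitors": (1_600, 20_000)}.items():
    means = sensitivity(conv, n)
    print(label, {k: round(v, 4) for k, v in means.items()}, f"spread={spread(means):.4f}")
```

When the spread across reasonable priors is large relative to the differences you care about, the prior rather than the data is driving the result. At 200 visitors the strong prior pulls the estimate far toward 5%; at 20,000 visitors the three priors nearly agree.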

However, your choice of statistical approach is just the foundation. Two further techniques can improve your results:

- Variance reduction: Techniques like CUPED (Controlled-experiment Using Pre-Experiment Data), which incorporates pre-experiment data, and outlier management can dramatically reduce the sample size needed across all approaches. CUPED is approach-agnostic and compatible with fixed-horizon, sequential, and Bayesian methods.

- Multiple testing correction: If you're testing multiple metrics or variations simultaneously, you need to account for the increased chance of false positives.

There are two main approaches:

- Family-wise error rate (FWER): It controls the probability of making even one false discovery.

- False discovery rate (FDR): It controls the expected proportion of false discoveries among all discoveries.

Each serves different purposes and comes with different tradeoffs in statistical power. The key is knowing which one aligns with your business objectives.
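For the variance reduction point, here is a minimal CUPED sketch on hypothetical data. It estimates theta = cov(Y, X) / var(X) from a pre-experiment covariate X, then subtracts theta * (X - mean(X)) from the in-experiment metric Y. This leaves the mean unchanged but shrinks the variance. In a real experiment theta is usually estimated on data pooled across arms, and the adjusted metric then feeds into whichever statistical approach you use.

```python
import random
import statistics

def cuped_adjust(y, x):
    """CUPED: remove the part of metric y explained by pre-experiment covariate x."""
    mean_x, mean_y = statistics.mean(x), statistics.mean(y)
    cov_xy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y)) / (len(x) - 1)
    theta = cov_xy / statistics.variance(x)  # both use the n - 1 denominator
    adjusted = [yi - theta * (xi - mean_x) for xi, yi in zip(x, y)]
    return adjusted, theta

# Hypothetical data: pre-period spend x strongly predicts in-experiment spend y.
rng = random.Random(3)
x = [rng.gauss(10.0, 2.0) for _ in range(5_000)]
y = [0.8 * xi + rng.gauss(0.0, 1.0) for xi in x]

adjusted, theta = cuped_adjust(y, x)
ratio = statistics.variance(adjusted) / statistics.variance(y)
print(f"theta = {theta:.2f}, variance ratio = {ratio:.2f}")
```

Because the required sample size for a given power scales roughly in proportion to the metric's variance, a variance ratio of r means needing roughly r times as many visitors.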
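For the multiple testing point, the sketch below contrasts a classic FWER method (Bonferroni) with a classic FDR method (the Benjamini-Hochberg step-up procedure) on five hypothetical p-values. These are textbook procedures chosen for illustration, not a description of any specific platform's correction.

```python
def bonferroni(p_values, alpha=0.05):
    """FWER control: reject hypothesis i only if p_i <= alpha / m."""
    m = len(p_values)
    return [p <= alpha / m for p in p_values]

def benjamini_hochberg(p_values, alpha=0.05):
    """FDR control via the Benjamini-Hochberg step-up procedure."""
    m = len(p_values)
    order = sorted(range(m), key=lambda i: p_values[i])  # indices by ascending p
    # Largest rank k (1-based) whose sorted p-value satisfies p_(k) <= k / m * alpha.
    cutoff = 0
    for rank, i in enumerate(order, start=1):
        if p_values[i] <= rank / m * alpha:
            cutoff = rank
    rejected = [False] * m
    for i in order[:cutoff]:  # reject every hypothesis up to that rank
        rejected[i] = True
    return rejected

# Hypothetical p-values for five metrics in one experiment.
p_values = [0.001, 0.008, 0.012, 0.041, 0.20]
print("Bonferroni:        ", bonferroni(p_values))
print("Benjamini-Hochberg:", benjamini_hochberg(p_values))
```

On these inputs Bonferroni keeps two results and Benjamini-Hochberg keeps three. This illustrates the extra power FDR control gains in exchange for a looser guarantee.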

Optimizely's approach...

Stats Engine deploys a novel algorithm called the mixture sequential probability ratio test (mSPRT).

After every visitor, it measures how much more consistent the data is with some non-zero improvement than with no improvement at all. This ratio expresses the relative plausibility of the variation(s) compared to the baseline.

The mSPRT is a special type of statistical test that improves upon the sequential probability ratio test (SPRT), introduced by Abraham Wald in the 1940s and developed extensively in Stanford statistician David Siegmund's 1985 book Sequential Analysis. The original SPRT was designed to test one exact, specific value of the lift from a single variation against a single control. It compares the likelihood of the data under that non-zero improvement with its likelihood under zero improvement from the baseline.

Specifically, Optimizely's mSPRT algorithm averages the ordinary SPRT across a range of possible improvements (that is, alternative lift values) rather than testing a single one.
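Here is a minimal sketch of a mixture SPRT. It assumes each observation is a normally distributed difference with known variance `sigma2`, and that possible lifts are weighted by a normal distribution centered on zero with variance `tau2` (both hypothetical parameters). Averaging the SPRT likelihood ratio over that distribution has a closed form. Rejecting when it exceeds 1/alpha keeps the false positive rate at or below alpha even when you check after every observation. This is a textbook version for illustration, not Optimizely's Stats Engine implementation.

```python
import math
import random

def msprt_log_statistic(n, mean, sigma2, tau2):
    """Log of the normal-mixture SPRT statistic for H0: true mean = 0.

    The SPRT likelihood ratio for a single alternative lift is averaged over
    a N(0, tau2) distribution of lifts; with N(mean, sigma2) observations and
    known sigma2 this average has the closed form below.
    """
    denom = sigma2 + n * tau2
    return 0.5 * math.log(sigma2 / denom) + (n * mean) ** 2 * tau2 / (2 * sigma2 * denom)

def msprt_first_rejection(data, sigma2=1.0, tau2=1.0, alpha=0.05):
    """Check after every observation; return the 1-based index of the first
    rejection of H0, or None if H0 is never rejected."""
    log_threshold = math.log(1 / alpha)
    total = 0.0
    for n, x in enumerate(data, start=1):
        total += x
        if msprt_log_statistic(n, total / n, sigma2, tau2) >= log_threshold:
            return n
    return None

rng = random.Random(7)

# A/A check: no true effect, peeking after every one of 1,000 observations.
n_sims = 500
false_positives = sum(
    msprt_first_rejection([rng.gauss(0.0, 1.0) for _ in range(1_000)]) is not None
    for _ in range(n_sims)
)
print(f"False positive rate with continuous peeking: {false_positives / n_sims:.3f}")

# Real effect: a mean difference of 0.3 standard deviations.
stop = msprt_first_rejection([rng.gauss(0.3, 1.0) for _ in range(1_000)])
print(f"Effect detected after {stop} observations")
```

The mixture statistic demands more evidence at each look than a one-shot z-test at the same alpha, which is what makes checking after every observation safe. The cost is that tests with no real effect tend to run to the end without a decision.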

Optimizely’s Stats Engine also employs a form of empirical Bayes. It blends the best of frequentist and Bayesian methods while maintaining the always-valid guarantee for continuous monitoring of experiment results.

Stats Engine takes more evidence to produce a significant result, which allows experimenters to peek as many times as they desire over the life of an experiment. Stats Engine also controls your false-positive rates at all times regardless of when or how often you peek, and further adjusts for situations where your experiment has multiple comparisons (i.e., multiple metrics and variations).

Controlling the False Discovery Rate offers a way to increase power while maintaining a principled bound on error. Put simply, the False Discovery Rate is the expected share of your significant results that are false alarms: cases of crying wolf over an innocuous finding.

Together, these properties let Stats Engine support continuous monitoring with always-valid results, keeping the false positive rate controlled regardless of when or how often the experimenter peeks.

Wrapping up...

There is no "best" statistical approach, only the right tool for your situation.

Most companies don't need to obsess over the perfect statistical implementation. They need to:

- Choose an approach that fits their business constraints

- Implement it correctly

- Use it consistently

- Focus on asking the right questions

The goal isn't statistical purity. It's making good business decisions efficiently based on reliable evidence.

Remember, no statistical method will save you from a bad experiment design or irrelevant metrics. The best statistics in the world can't fix a test that doesn't matter to your users.

Ready to plan your next experiment?

Try our sample size calculator to ensure you're collecting enough data for reliable results.