Introduction

“What’s the revenue impact of these experiments?”

If you’ve heard this question in a meeting, you’re not alone.

Most experimentation programs start strong, racking up win rates and quick wins – but stall when showing clear ROI. Leadership doesn’t want slides with stats. What they want to see is a clear impact on revenue, retention, and growth.

The difference between good and great programs isn’t tools or talent. It’s how you scale.

The highest-performing experimentation teams master four key pillars:

- They tie metrics to business value

- Increase test velocity without losing quality

- Go beyond A/B testing into advanced methods

- Optimize digital experiences across the customer journey

This guide walks you through how to do it, so your experimentation doesn’t just grow, it performs.

Why most experimentations stall (and how to fix it)

Before you scale your experimentation program it’s critical to understand the current and future challenges that could impact on this growth.

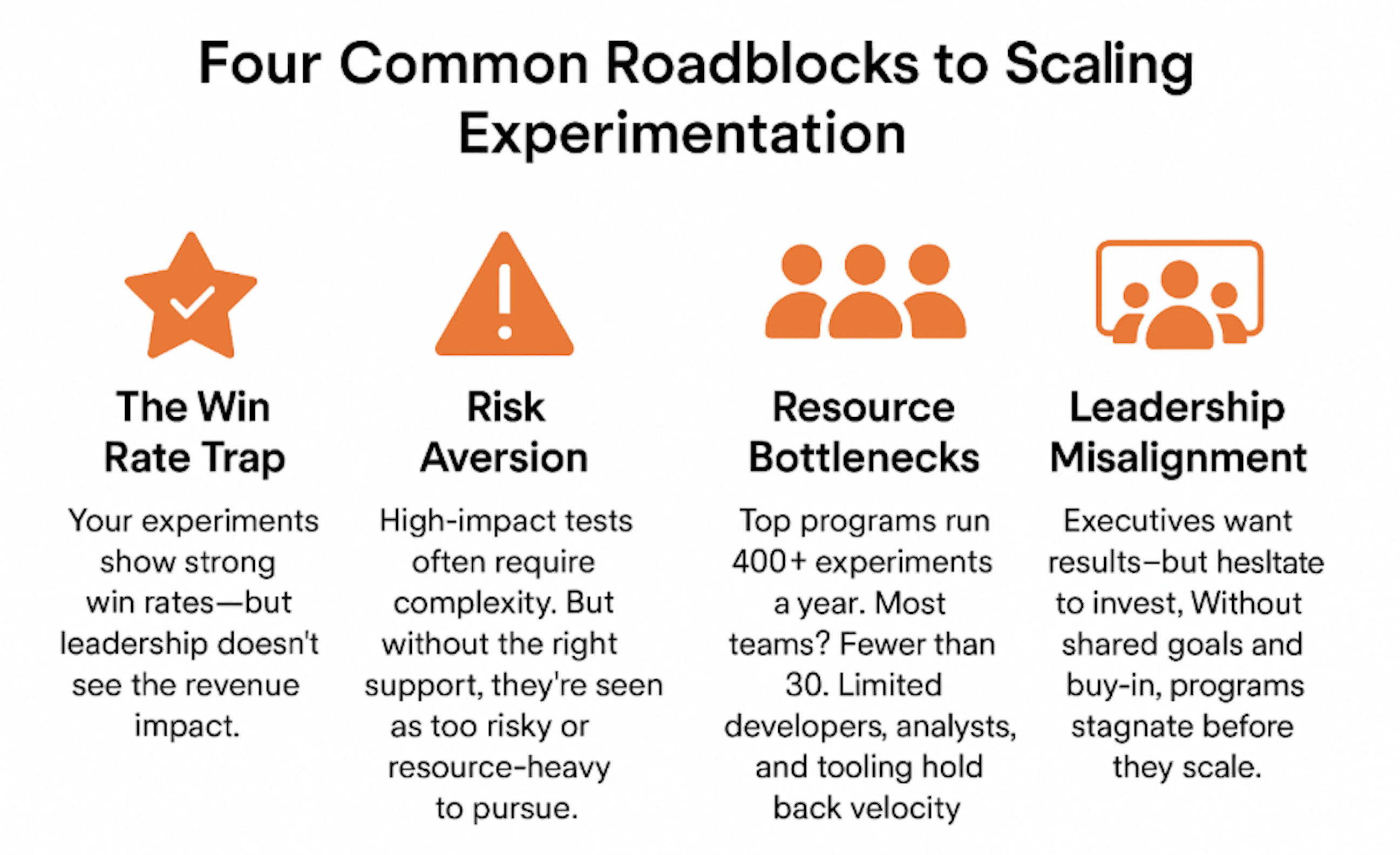

We see these four common roadblocks consistently preventing teams from achieving velocity and ROI.

Image source: Optimizely

The four pillars for scaling experimentation

Scaling your experimentation program requires mastery in four critical areas (metrics, velocity + quality, advanced testing techniques and building better digital experiences). These pillars work together as an integrated system, strengthening one improves the others.

The most successful experimentation programs don't excel in just one area. They build capability across all four pillars simultaneously.

"The difference between good and great experimentation isn't tools or talent, it's a systematic approach across metrics, velocity, techniques, and culture."

Use experimentation metrics that drive real revenue

Many teams default to experimentation metrics that are easy to measure, but not the ones that actually move the needle.

Over 90% of experiments target 5 common metrics: CTA clicks, Revenue, Checkout, Registration, Add-to-cart.

Our analysis of 127,000+ experiments reveals a surprising truth: 3 out of the 5 most commonly used metrics have the lowest impact on business outcomes.

Could you accidentally be ignoring metrics that can make a difference? For example, search optimization shows a 2.3% expected impact but is used in only 1.3% of experiments.

High-impact opportunities are being missed.

To scale experimentation that delivers ROI, your metrics need to be predictive, actionable, and aligned with long-term value, not just short-term wins.

Mark Wakelin, Principal Consultant, Strategy & Value, discusses what metrics you should choose for you program.

Build your experimentation metrics framework

Not all metrics are created equal. High-performing experimentation programs use a clear measurement strategy that connects every test to business outcomes.

✅ Prioritize what matters

- Primary: Revenue-focused metrics (conversion rate, AOV)

- Secondary: Behavioral indicators (add-to-cart, engagement, abandonment)

- Guardrails: UX protectors (load time, error rates)

- Journey: Multi-step behaviors (return visits, checkout flows)

✅ Map to the funnel: Track performance across awareness, consideration, decision, and retention stages.

✅ Measure with confidence: Use event tracking, set sample size and statistical thresholds, and apply 95% confidence intervals.

✅ Align with business goals: Optimize for what drives impact, whether that’s LTV, efficiency, satisfaction, or market share.

Scale test velocity without losing quality or impact

Are you running enough experiments, or too many?

Our data shows that the median company runs just 34 experiments a year:

- Median company: 34 experiments annually

- Top 10%: 200+ experiments annually

- Top 3%: 500+ experiments annually

The common myth: Running more tests automatically leads to better results.

The data tells a different story: Our analysis reveals that impact per test peaks at 1-10 annual tests per engineer. Beyond 30 tests per engineer, the expected impact drops by a staggering 87%.

Remember...

Volume at the cost of quality can harm performance. The key is test prioritization.

Use the PIE framework to score each idea:

- Potential impact (1-10)

- Importance to business (1-10)

- Ease of implementation (1-10)

Team structure: Start with core roles (Experimentation Lead, Product Manager, Developer) before expanding to specialists.

Here’s how to build your experimentation team from scratch.

Democratization of experimentation that drives bigger wins

Most experiments today are simple A/B tests, and that’s a missed opportunity.

Our data shows:

- 77% of experiments test only two variations (A/B)

- Yet tests with 4+ variations are 2.4x more likely to win

- They also deliver 27.4% higher uplifts

To move from incremental improvements to breakthrough results, you need to democratize experimentation and keep your developers sane.

Here's Sathya Narayanan, Director, Product Management on the need for democratization of experimentation and what it actually means.

Scaling also means going beyond basic tests to advanced strategies that uncover deeper insights and unlock scalable impact.

8 top tips to expand your testing arsenal:

- Multivariate testing: Test multiple variables at once to uncover interactions across headlines, CTAs, layout, and more.

- Server-side testing: Run experiments directly on your backend to test logic, functionality, or performance—not just UI changes.

- Feature flags: Launch features safely with controlled rollouts.

- Multi-armed bandits: Automatically shift traffic to the best-performing variations in real-time to maximize gains.

- CUPED (Controlled-experiment using pre-experiment data): Improve test sensitivity by reducing variance using historical data.

- Contextual bandits: Tailor experiences dynamically based on user context (e.g., device type, behavior, location).

- Ratio metrics: Create custom metrics by defining a denominator event (e.g., revenue per engaged user).

- Painted door tests: Validate interest in a new feature before building it - show the door, see who clicks.

Advanced testing isn’t just for data science teams. With the right tools and strategy, any program can scale smarter and uncover better insights.

Optimize digital experiences across the customer journey

Most companies run experiments on individual pages or elements but fail to optimize the complete customer journey, which limits their ROI potential:

Here's why:

- Disconnected workflows: Teams struggle with fragmented processes. Only 29% of employees are satisfied with workplace collaboration (Gartner, 2024).

- Resource bottlenecks: Development and data science expertise are scarce, often delaying optimization efforts by weeks.

- Fragmented experiences: Customers expect consistency across web, mobile, email, and social media.

- Data Silos: Surface metrics fail to connect to business outcomes like revenue and lifetime value.

- Delayed insights: 43% of executives are concerned about their current infrastructure’s ability to handle future data volumes.

This is why teams are moving beyond isolated experiments to a holistic approach for digital experiences powered by the four accelerators: Collaboration, Personalization, Analytics, and AI.

Together, these capabilities turn experimentation from tactical testing into strategic business growth.

The four accelerators

Personalization, Analytics, AI, and Collaboration.

1. Resonate with your audience through personalization

One size fits all is no longer a viable digital marketing approach. You can't just push the same website recommendations to a broad audience.

Ask yourself: Do you even resonate?

Personalized experiences generate a 41% higher impact compared to general experiences.

Still, most digital businesses avoid personalization due to resource constraints, uncertainty about customer preferences, and the complexity of implementing tailored experiences.

Half of all experiments today use a personalization strategy (here’s how to build one).

When personalizing, keep in mind:

- WHAT: The change you want to make in the default digital experience

- WHO: The specific user or group you want to deliver it to

- WHY: If the change meets the original objective/goal

- WHERE: The platform you’ll use to create a personalized experience

It's all about creating customer journeys that provide a comprehensive view from the customers' perspective.

Here's Nicola Ayan, VP, Solution Strategy discussing where to get started with personalization.

However, the issue is that out of teams running personalized experiments, only 31% believe it's improving their bottom line.

But, here's how you can measure personalization ROI.

2. Connect experiments to business impact with warehouse-native analytics

Traditional experimentation only tells half the story.

By connecting your experiments directly to your data warehouse, you can:

- Test against true business metrics like customer lifetime value and return rates

- Eliminate data discrepancies between systems

- Maintain security by keeping sensitive data in your warehouse

- Access all your data without complex pipelines or data duplication

When everyone works from the same warehouse data, you eliminate debates about whose numbers are right and focus on insights, not reconciling reports.

Here's Vijay Ganesan, VP of Software Engineering, discussing what warehouse-native analytics actually means.

3. AI in experimentation

In the past, launching a new test was a slow, cumbersome process.

Teams would spend months perfecting their hypotheses, worrying about sample sizes, and manually analyzing results. But with AI, that approach feels as outdated as dial-up modems.

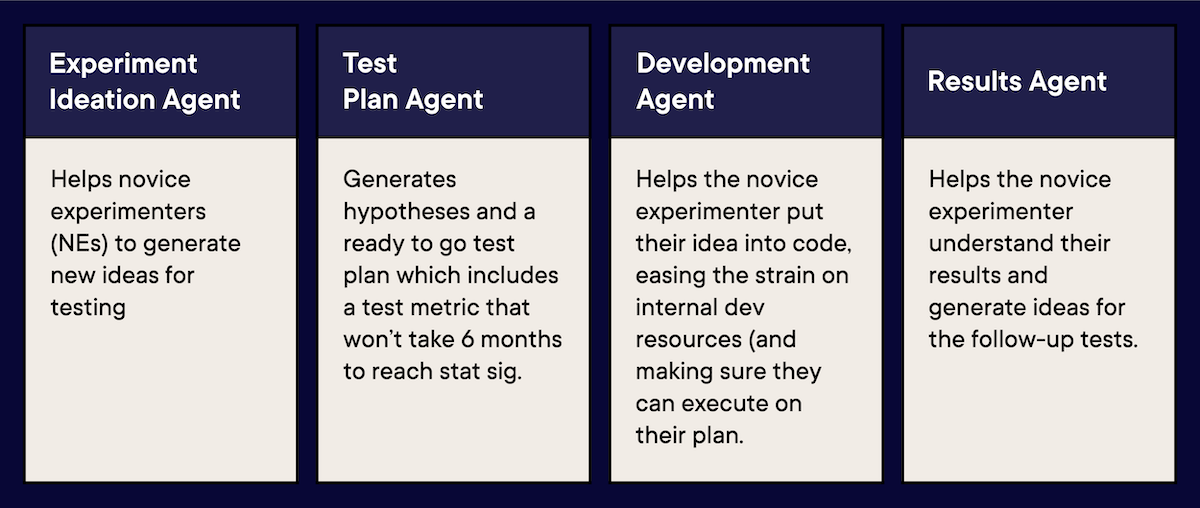

Image source: Optimizely

Image source: Optimizely

How AI experimentation is improving the experimentation process:

- AI variation generation: AI doesn't just suggest one-off changes, it recommends complete experiments with multiple variations built-in.

- Intelligent metric selection: For experienced teams, AI provides guidance warning you if a metric will take too long to reach significance. For newer users, AI will jump right into suggesting a test plan with ready-to-go metrics.

- Optimization: Instead of waiting weeks for results, AI continuously monitors test performance and adjusts traffic allocation.

- Problem definition: AI in product development identifies recurring issues, turning them into structured requirements for better features.

Here's a live example of how you can already use AI for generating test summaries:

What you get is insights that are easy for everyone to understand and take the next step.

4. Collaboration

Where we work has changed, but how we work has not. Teams lose valuable ideas in scattered messages and struggle to coordinate across time zones.

Collaboration’s three principles improve how teams run tests:

- Structure beats improvisation: Standardized forms, clear workflows, and explicit review steps ensure quality.

- Written beats verbal: Document ideas as they happen, giving everyone equal voice regardless of location.

- Widespread input beats small groups: Share early, get diverse feedback, and create transparent processes.

Here's Sathya Narayanan, Director of Product Management discussing how Collaboration improves the performance of an experimentation program.

Bringing it all together: A scalable experimentation framework

Your experimentation program is like an engine where all parts work together. Here's your scaling checklist:

- Metrics that matter: Connected to business outcomes and revenue impact

- Strategic velocity: Quality experiments that balance resources and impact

- Advanced testing techniques: Moving beyond simple A/B to multi-variation testing

- Digital experience optimization: Integrated approach to customer journeys

- The four accelerators: Personalization, Analytics, AI, and Collaboration make it all work together.

Scaling isn't about running more tests.

It's about running smarter tests that drive real business value. It's experimentation that leadership doesn't question but rather champions.

Want to dive deeper?

- Full report from 127k experiments: Evolution of Experimentation

- How top brands combine the four accelerators: The big book of experimentation