A/B testing

What is A/B testing?

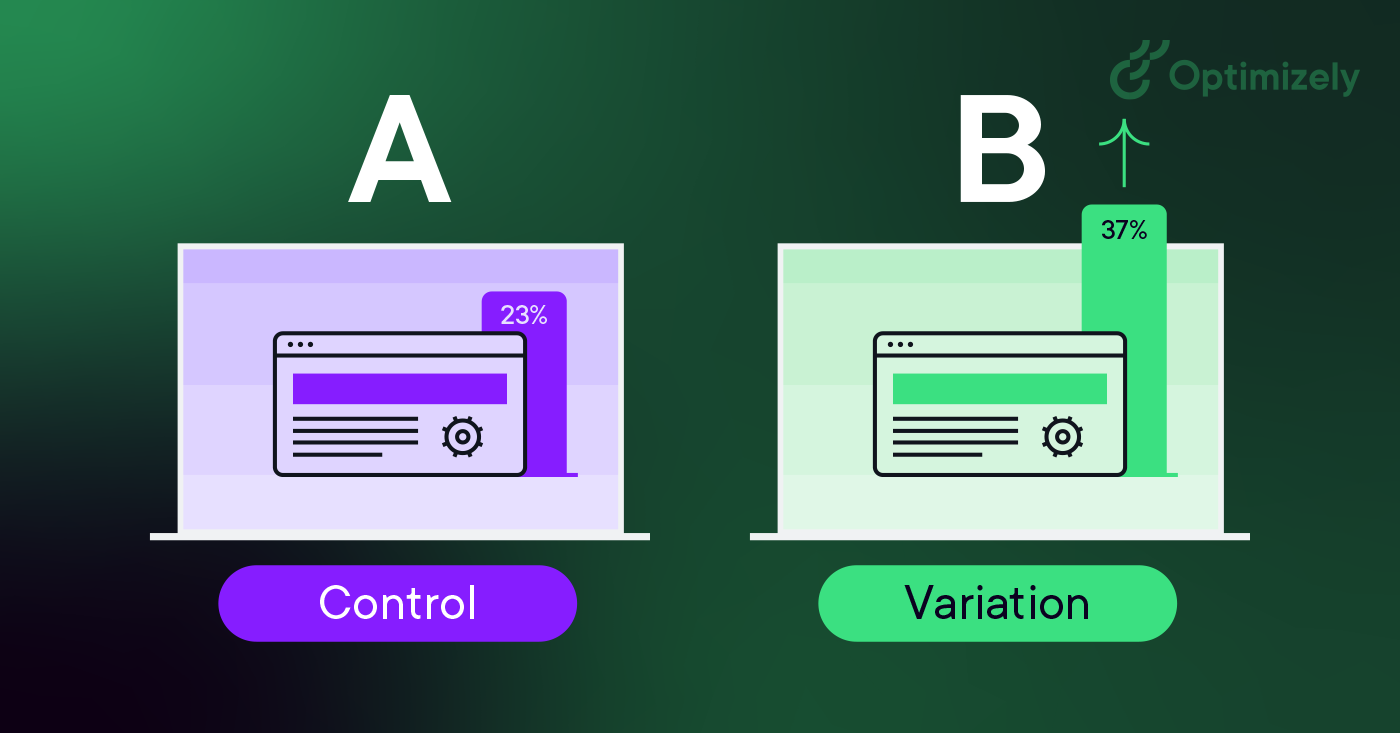

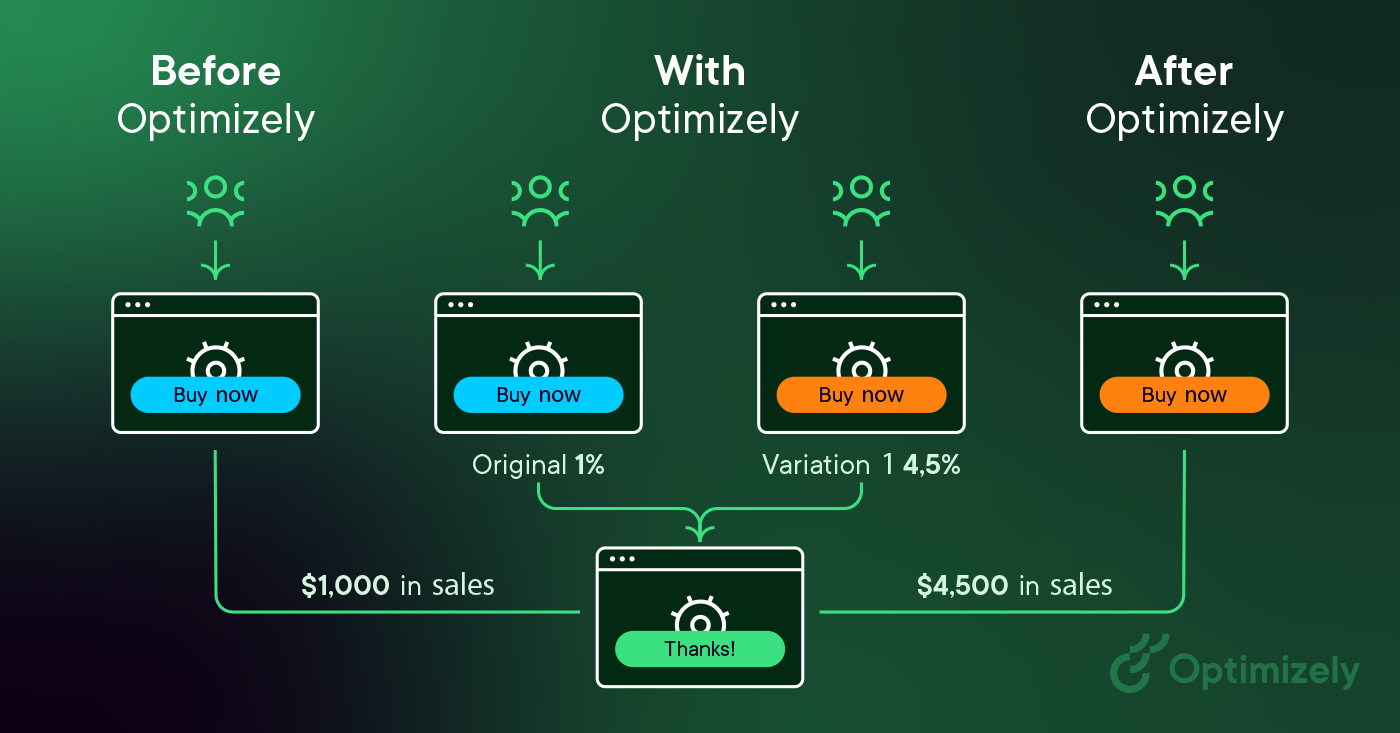

A/B testing (also known as split testing or bucket testing) is a method for comparing two versions of a web page or app against each other to determine which one performs better. It works by showing the two variants to users at random and using statistical analysis to determine which variant performs better against your conversion goals.

In practice, A/B testing works like this:

- Create two versions of a page: the original (control, or A) and a modified version (variation, or B)

- Split traffic randomly between the two versions

- Measure user engagement in a dashboard

- Analyze the results to determine whether the changes had a positive, negative, or neutral effect
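The random split in the second step is often implemented as deterministic bucketing, so that a returning visitor keeps seeing the same version. The following is a minimal Python sketch of that idea, not a description of how any particular testing tool works. The function name `assign_variant`, the experiment key, and the choice of SHA-256 are assumptions made for illustration.

```python
import hashlib


def assign_variant(visitor_id: str, experiment: str, share_a: float = 0.5) -> str:
    """Return "A" (control) or "B" (variation) for a visitor.

    Hypothetical helper: hashing the experiment name together with the
    visitor ID gives a stable, roughly uniform assignment without stored state.
    """
    digest = hashlib.sha256(f"{experiment}:{visitor_id}".encode("utf-8")).hexdigest()
    # Map the first 8 hex digits (32 bits) to a number in [0, 1).
    bucket = int(digest[:8], 16) / 2**32
    return "A" if bucket < share_a else "B"


# The same visitor gets the same answer on every call for a given experiment.
first = assign_variant("visitor-123", "homepage-headline")
second = assign_variant("visitor-123", "homepage-headline")
print(first, second)
```

Including the experiment name in the hash keeps assignments in one test independent of assignments in another, which is one common design choice among several.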

The changes you test can range from simple tweaks (such as a headline or a button) to a complete redesign of the page. By measuring the impact of each change, A/B testing turns website optimization from guesswork into data-informed decisions, and shifts the conversation from "we think" to "we know".

As visitors are served either the control or the variation, their engagement with each experience is measured, collected in a dashboard, and analyzed by a statistical engine. You can then determine whether changing the experience (variation, or B) had a positive, negative, or neutral effect compared with the baseline (control, or A).

"The concept of A/B testing is simple: show different variations of your website to different people and measure which variation is most effective at turning them into customers."

Dan Siroker and Pete Koomen (Book | A/B Testing: The Most Powerful Way to Turn Clicks Into Customers)

Why you should A/B test

A/B testing lets individuals, teams, and companies make careful changes to their user experiences while collecting data on the impact. This allows them to construct hypotheses and learn which elements and optimizations of the experience affect user behavior the most. Put another way, they can be proven wrong: their opinion about the best experience for a given goal can be disproven by an A/B test.

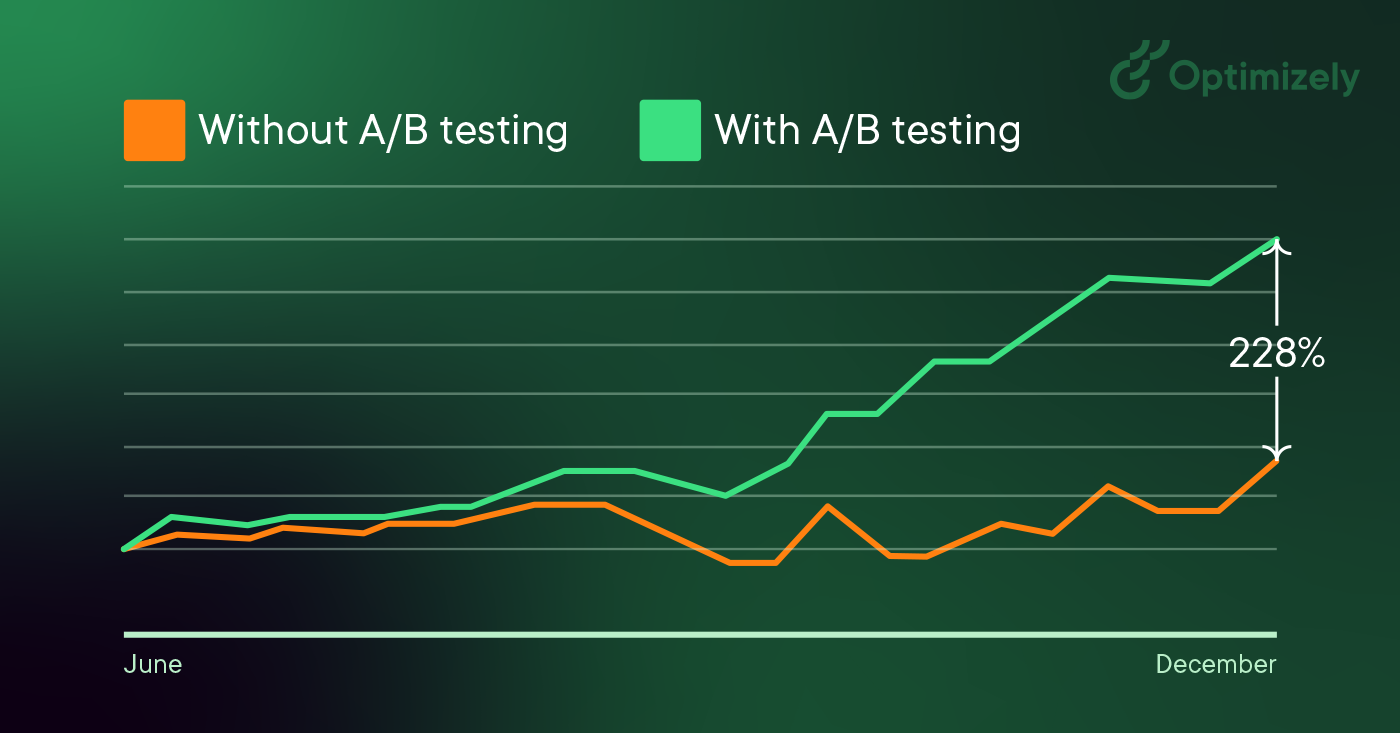

A/B testing is useful for more than answering a one-off question or settling a disagreement. It can also be used to continually improve a given experience or a single goal, such as conversion rate, over time (conversion rate optimization, or CRO).

Examples of A/B testing use cases:

- B2B lead generation: If you are a technology company, you can improve your landing pages by testing changes to headlines, form fields, and CTAs. By testing one element at a time, you can identify which changes increase lead quality and conversion rates.

- Campaign performance: If you run a product marketing campaign, you can optimize ad spend by testing both ad copy and landing pages. Testing different layouts, for example, can reveal which version converts visitors into customers most effectively, which lowers your overall customer acquisition cost.

- Product experience: Your company's product teams can use A/B testing to validate assumptions, prioritize the features that matter, and ship products with less risk. From onboarding flows to in-product notifications, testing helps optimize the user experience while keeping goals and hypotheses clear.

A/B testing helps shift decision-making from opinion-based to data-driven, challenging the HiPPO (Highest Paid Person's Opinion).

As Dan Siroker notes: "It's about being humble ... maybe we don't actually know what's best, so let's look at the data and use it to guide us."

How to run A/B tests

The following A/B testing framework can help you get started with running tests:

1. Collect data

- Use analytics tools such as Google Analytics to identify opportunities

- Focus on high-traffic areas, using heatmaps

- Look for pages with high drop-off rates

2. Set clear goals

- Define the specific metrics to improve

- Establish measurement criteria

- Set improvement targets

3. Create test hypotheses

- Formulate clear predictions

- Base ideas on existing data

- Prioritize by potential impact

4. Design variations

- Make specific, measurable changes

- Ensure tracking is set up correctly

- Test the technical implementation

5. Run the experiment

- Split traffic randomly

- Monitor for issues

- Collect data systematically

6. Analyze the results

- Check statistical significance

- Review all metrics

- Document what you learned
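Setting improvement targets (step 2) and checking significance (step 6) are linked: the smaller the lift you want to detect, the more visitors each version needs. As a rough planning aid, the sketch below uses the textbook normal-approximation formula for comparing two proportions. The function name, the default 5% significance level, and the 80% power are assumptions for illustration; a testing platform may use a different statistical method.

```python
import math
from statistics import NormalDist


def sample_size_per_variant(baseline_rate: float, relative_lift: float,
                            alpha: float = 0.05, power: float = 0.8) -> int:
    """Approximate visitors needed per version for a two-sided test."""
    p1 = baseline_rate
    p2 = baseline_rate * (1 + relative_lift)  # rate you hope the variation reaches
    if not (0 < p1 < 1 and 0 < p2 < 1) or p1 == p2:
        raise ValueError("rates must lie strictly between 0 and 1 and differ")
    z_alpha = NormalDist().inv_cdf(1 - alpha / 2)  # critical value for significance
    z_beta = NormalDist().inv_cdf(power)           # critical value for power
    p_bar = (p1 + p2) / 2
    numerator = (z_alpha * math.sqrt(2 * p_bar * (1 - p_bar))
                 + z_beta * math.sqrt(p1 * (1 - p1) + p2 * (1 - p2))) ** 2
    return math.ceil(numerator / (p2 - p1) ** 2)


# Example: a 10% baseline conversion rate and a hoped-for 10% relative lift.
print(sample_size_per_variant(0.10, 0.10))
```

Halving the lift you want to detect roughly quadruples the required traffic, which is why low-traffic pages are better suited to bolder changes.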

If your variation wins, great! Apply that insight to similar pages and keep iterating to build on the success. But remember: not every test will be a winner, and that is fine.

More on failed A/B tests: How failed tests can lead you to success

In A/B testing there are no real failures, only opportunities to learn. Every test, whether it shows positive, negative, or neutral results, provides valuable insight into your users and helps refine your testing strategy.

A/B testing examples

Here are two examples of A/B testing in practice.

1. Homepage A/B test

Felix and Michiel from the digital team decided to add a paw-themed surprise to the creative and messaging on our homepage.

The goal was to increase user engagement.

The team found that, in this case, the answer was lots of doggos.

During the experiment, visitors who petted the dog on the homepage received a link to our "Evolution of Experimentation" report.

However, visitors saw the dog only 50% of the time.

Result: People who were exposed to the dog consumed the content 3 times more than those who did not see the dog.

2. From pop-up to flop-up

Ronnie Cheung, senior strategy consultant at Optimizely, wanted to introduce a pop-up showing facility details in the map view, because clicking a pin in the map view took users to a PDP (product detail page), which added an extra step before completing checkout.

- Result: Fewer users reached the checkout page

- Takeaway: Improve the information in the pop-up so that users can proceed to checkout with confidence.

For more, see the industry-specific list of A/B testing examples.

And for success stories, check out the Big Book of Experimentation. It contains more than 40 case studies showing the challenges and the hypotheses used to solve them.

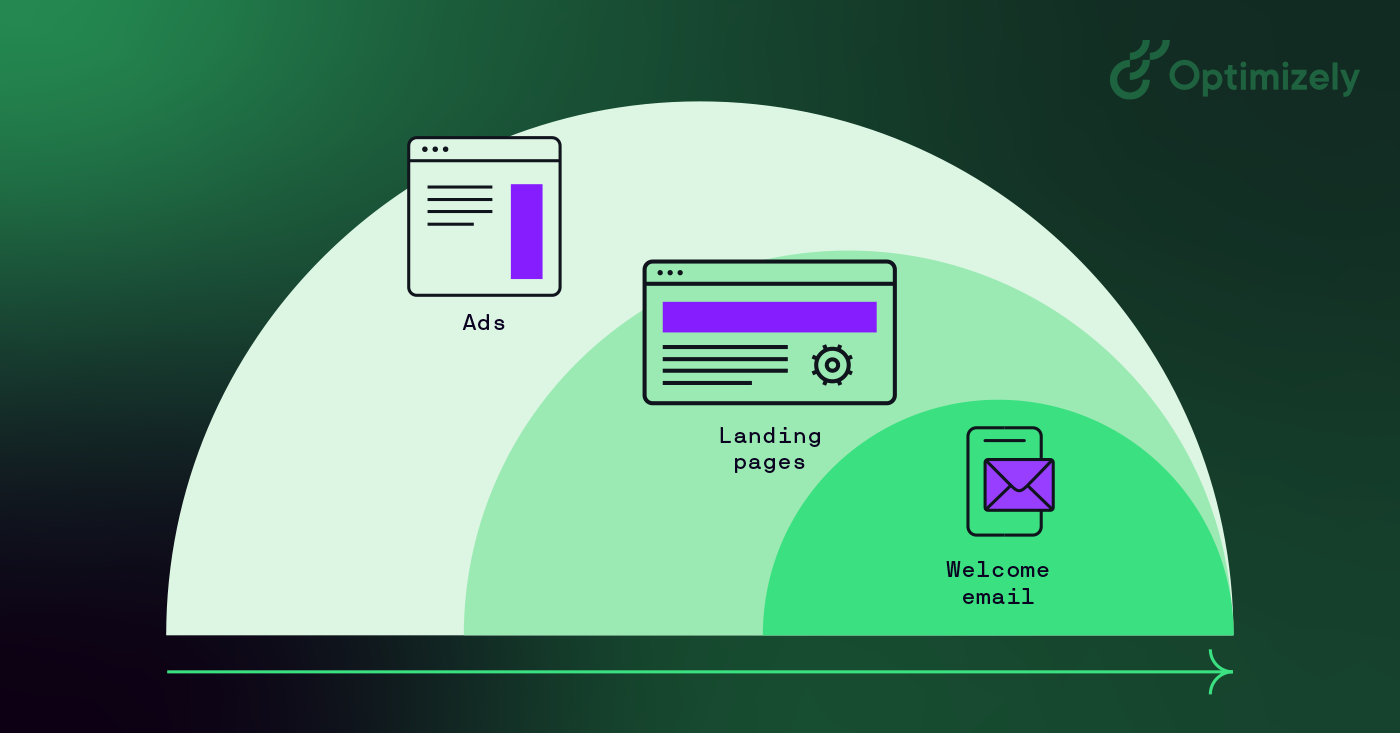

Creating a culture of A/B testing

Strong digital marketing teams make sure to involve multiple departments in the experimentation program. When you test across departments and touchpoints, you can be more confident that the changes you make to your marketing are statistically significant and have a positive impact on the bottom line.

Example use cases

- Social media A/B testing: Posting times, content formats, ad creative variations, audience targeting, campaign messaging

- Marketing A/B testing: Email campaigns, landing pages, ad copy and creatives, call-to-action buttons, form design

- Website A/B testing: Navigation design, page layout, content presentation, checkout processes, search functionality

But you can only scale your program if it is built on a test-and-learn mindset. Here is how to build a culture of experimentation:

1. Leadership buy-in

- Demonstrate value through early wins

- Share success stories

- Tie results to business goals

2. Team empowerment

- Provide the necessary tools

- Offer training

- Encourage hypothesis generation

3. Process integration

- Make testing part of the development workflow

- Create clear testing protocols

- Document and share learnings

Measuring A/B tests

A/B testing requires analytics that can track multiple types of metrics while connecting to your data warehouse for deeper insight.

Here is what you can start measuring:

- Primary success metrics: Conversion rate, click-through rate, revenue per visitor, average order value

- Supporting indicators: Time on page, bounce rate, pages per session, user journey patterns

- Technical performance: Load time, error rates, mobile responsiveness, browser compatibility
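Three of the primary success metrics above can be derived from the same per-version totals. The sketch below shows one way to compute them in Python. The `VariantTotals` structure and its field names are hypothetical, and conversion rate is assumed here to mean orders divided by visitors; your own definition may differ.

```python
from dataclasses import dataclass


@dataclass
class VariantTotals:
    """Hypothetical per-version totals collected during a test."""
    visitors: int
    orders: int
    revenue: float


def primary_metrics(totals: VariantTotals) -> dict:
    """Compute conversion rate, revenue per visitor, and average order value."""
    return {
        # Share of visitors who placed an order (assumed definition).
        "conversion_rate": totals.orders / totals.visitors if totals.visitors else 0.0,
        # Revenue spread over everyone who saw the version.
        "revenue_per_visitor": totals.revenue / totals.visitors if totals.visitors else 0.0,
        # Revenue spread over buyers only.
        "average_order_value": totals.revenue / totals.orders if totals.orders else 0.0,
    }


control = VariantTotals(visitors=1000, orders=50, revenue=2500.0)
print(primary_metrics(control))
```

Revenue per visitor combines the other two (conversion rate times average order value), so it is a useful check that a higher conversion rate was not bought with smaller orders.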

What really makes the difference is warehouse-native analytics. It gives you full control over where your data lives, because you can keep test data in-house. You can also test against real business outcomes and enable automated cohort analyses. The result is seamless testing across channels with a single source of truth, while maintaining strict data governance and compliance.

Understanding A/B test results

Test results vary depending on the type of business and its goals. For example, while e-commerce sites focus on purchase metrics, B2B businesses may prioritize lead-generation metrics. Whatever your focus, set clear goals before you start the test.

For example, if you are testing a CTA button, you will see:

- The number of visitors who saw each version

- Clicks on each variation

- Conversion rate (the percentage of visitors who clicked)

- Statistical significance of the difference

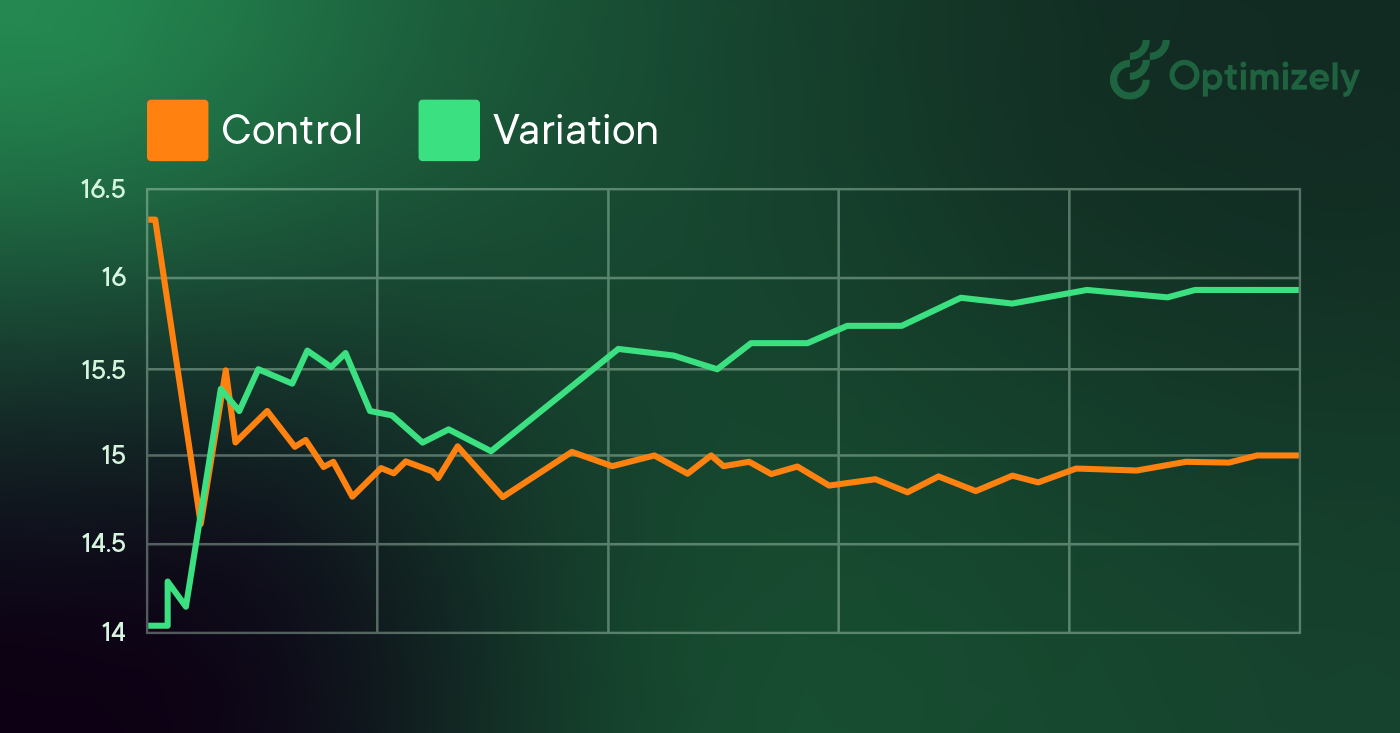

When you run A/B tests and analyze the results, statistical significance tells you whether your results are reliable or merely due to chance.

When analyzing the results:

- Compare against the baseline (version A)

- Look for a statistically significant improvement

- Consider the practical impact of the improvement

- Check whether the results are consistent with other metrics
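For the CTA button example, one classical way to check significance is a two-proportion z-test on visitors and clicks. The sketch below is a fixed-horizon textbook test written in Python, offered under that assumption only. It is not necessarily what the statistical engine of a given testing platform does, and the function name and sample numbers are made up for illustration.

```python
import math
from statistics import NormalDist


def two_proportion_z_test(visitors_a: int, clicks_a: int,
                          visitors_b: int, clicks_b: int):
    """Return (rate_a, rate_b, z, two_sided_p_value) for B versus A."""
    rate_a = clicks_a / visitors_a
    rate_b = clicks_b / visitors_b
    # Pooled rate under the null hypothesis that A and B perform the same.
    pooled = (clicks_a + clicks_b) / (visitors_a + visitors_b)
    std_err = math.sqrt(pooled * (1 - pooled) * (1 / visitors_a + 1 / visitors_b))
    if std_err == 0:
        raise ValueError("no variation in the data; the test is undefined")
    z = (rate_b - rate_a) / std_err
    p_value = 2 * (1 - NormalDist().cdf(abs(z)))
    return rate_a, rate_b, z, p_value


# Illustrative numbers only: 10,000 visitors per version.
rate_a, rate_b, z, p_value = two_proportion_z_test(10_000, 1_000, 10_000, 1_200)
print(f"A: {rate_a:.1%}  B: {rate_b:.1%}  z = {z:.2f}  p = {p_value:.4f}")
```

A small p-value (commonly below 0.05) suggests the difference is unlikely to be chance alone. Whether the lift is large enough to matter for the business is a separate question, which is why the practical impact is on the checklist above.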

Segmenting A/B tests

Larger sites and apps often use segmentation in their A/B tests. If the number of visitors is high enough, this is a valuable way to test changes for specific groups of visitors. A common segmentation for A/B tests splits new visitors from returning visitors. This lets you test changes to elements that apply only to new visitors, such as sign-up forms.

On the other hand, a common A/B testing mistake is creating audiences that are too small. Therefore, you should:

- Segment only when you have sufficient traffic

- Start with common segments (new vs. returning visitors)

- Make sure segment sizes support statistical significance

- Avoid creating too many small segments, which can lead to false positives
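The false-positive risk in the last point grows quickly with the number of segments you inspect. The Python sketch below illustrates this under the simplifying assumption that segments are independent and each is tested at the same significance level. The Bonferroni correction shown is one standard, conservative remedy, not the only one, and the function names are illustrative.

```python
def family_wise_error_rate(alpha: float, num_segments: int) -> float:
    """Chance of at least one false positive across independent segments."""
    return 1 - (1 - alpha) ** num_segments


def bonferroni_alpha(alpha: float, num_segments: int) -> float:
    """Stricter per-segment threshold that keeps the overall rate near alpha."""
    return alpha / num_segments


for segments in (1, 5, 10, 20):
    risk = family_wise_error_rate(0.05, segments)
    threshold = bonferroni_alpha(0.05, segments)
    print(f"{segments:>2} segments: risk {risk:.1%}, per-segment threshold {threshold:.4f}")
```

With a stricter threshold per segment, each segment also needs more traffic to reach significance, which is another reason to segment only when volume allows.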

A/B testing and SEO

Google permits and encourages A/B testing and has stated that running an A/B or multivariate test poses no inherent risk to your site's search ranking. However, it is possible to jeopardize your search ranking by abusing an A/B testing tool, for example for cloaking. Google has outlined some best practices to make sure this does not happen:

- No cloaking: Cloaking is showing search engines different content than a typical visitor would see. Cloaking can get your site demoted or even removed from search results. To avoid cloaking, do not abuse visitor segmentation to show different content to Googlebot based on user agent or IP address.

- Use rel="canonical": If you run a split test with multiple URLs, use the rel="canonical" attribute to point the variations back to the original version of the page. This prevents Googlebot from being confused by multiple versions of the same page.

- Use 302 redirects instead of 301s: If your test redirects the original URL to a variation URL, use a 302 (temporary) redirect rather than a 301 (permanent) redirect. This tells search engines such as Google that the redirect is temporary and that they should keep the original URL indexed rather than the test URL.
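The last two practices can be sketched in a few lines of framework-agnostic Python. This is only an illustration of the status code and the tag involved: the helper names and the example URLs are hypothetical, and a real site would emit these through its web framework or testing tool.

```python
from html import escape
from http import HTTPStatus


def variant_redirect(variant_url: str) -> tuple:
    """Build a temporary (302) redirect from the original URL to a variation URL."""
    return HTTPStatus.FOUND.value, {"Location": variant_url}


def canonical_link_tag(original_url: str) -> str:
    """Build the tag a variation page places in its <head> to point at the original."""
    return f'<link rel="canonical" href="{escape(original_url, quote=True)}">'


status, headers = variant_redirect("https://example.com/pricing-b")
print(status, headers["Location"])
print(canonical_link_tag("https://example.com/pricing"))
```

Using `HTTPStatus.FOUND` rather than `HTTPStatus.MOVED_PERMANENTLY` is the whole point of the sketch: the former is the 302 status, the latter the 301 status that would signal a permanent move.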

A media company might want to grow readership, increase the time readers spend on its site, and amplify its articles through social sharing. To achieve these goals, it might test variations on:

- Email sign-up modals

- Recommended content

- Social sharing buttons

A travel company might want to increase the number of successful bookings completed on its website or mobile app, or to increase revenue from ancillary purchases. To improve these metrics, it might test variations of:

- Homepage search modals

- Search results page

- Ancillary product presentation

An e-commerce company might want to improve the customer experience, resulting in more completed checkouts, a higher average order value, or increased holiday sales. To accomplish this, it might A/B test:

- Homepage promotions

- Navigation elements

- Checkout funnel components

A technology company might want to increase the number of high-quality leads for its sales team, increase the number of free trial users, or attract a specific type of buyer. It might test:

- Lead form fields

- Free trial sign-up flow

- Homepage messaging and call to action

Three takeaways

You can apply these to your own A/B testing program:

- You cannot rationalize customer behavior.

- No idea is too big, too clever, or too much of a "best practice" to be tested.

- Redesigning a website entirely from scratch is not the way to go. Be specific, but start small.

Remember that testing is an incredibly valuable opportunity to learn how customers interact with your website. Get started now with Optimizely Web Experimentation.
